user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log notice;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

http {

upstream vacuumflask {

server staticendpoint:8080 max_fails=3 fail_timeout=30s;

server dynamicendpoint:8080 max_fails=3 fail_timeout=30s;

least_conn; # Least connections

}

upstream blog1

{

server dynamicendpoint:80 max_fails=3 fail_timeout=30s;

}

server {

listen 80;

server_name blog4.cmh.sh;

return 301 https://$server_name$request_uri;

}

server {

listen 443 ssl;

server_name blog.cmh.sh blog4.cmh.sh;

location / {

proxy_pass http://vacuumflask;

proxy_set_header Host $host; # Add this line to forward the original host

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

#proxy_set_header Host $host;

ssl_certificate /tmp/ssl/wild.cmh.sh.crt;

ssl_certificate_key /tmp/ssl/wild.cmh.sh.key;

ssl_session_cache shared:SSL:1m;

ssl_session_timeout 120m;

ssl_protocols TLSv1.2 TLSv1.3;

ssl_ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384;

ssl_prefer_server_ciphers off;

# Basic security headers

add_header Strict-Transport-Security "max-age=31536000; includeSubDomains" always;

add_header X-Frame-Options SAMEORIGIN;

add_header X-Content-Type-Options nosniff;

add_header X-XSS-Protection "1; mode=block";

}

server {

listen 443 ssl;

server_name cmh.sh ots.cmh.sh;

location / {

proxy_pass http://blog1;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header Host $host; # Add this line to forward the original host

}

#proxy_set_header Host $host;

ssl_certificate /tmp/ssl/wild.cmh.sh.crt;

ssl_certificate_key /tmp/ssl/wild.cmh.sh.key;

ssl_session_cache shared:SSL:1m;

ssl_session_timeout 120m;

ssl_protocols TLSv1.2 TLSv1.3;

ssl_ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384;

ssl_prefer_server_ciphers off;

# Basic security headers

add_header Strict-Transport-Security "max-age=31536000; includeSubDomains" always;

add_header X-Frame-Options SAMEORIGIN;

add_header X-Content-Type-Options nosniff;

add_header X-XSS-Protection "1; mode=block";

}

server {

listen 443 ssl;

server_name oldblog;

location / {

proxy_pass http://blog1;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header Host $host; # Add this line to forward the original host

}

#proxy_set_header Host $host;

ssl_certificate /tmp/ssl/wild.oldblog.dev.25.pem;

ssl_certificate_key /tmp/ssl/wild.oldblog.key;

ssl_session_cache shared:SSL:1m;

ssl_session_timeout 120m;

ssl_protocols TLSv1.2 TLSv1.3;

ssl_ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384;

# tail -f /var/log/nginx/error.log

# tail -f /var/log/nginx/access.log

ssl_prefer_server_ciphers off;

# Basic security headers

add_header Strict-Transport-Security "max-age=31536000; includeSubDomains" always;

add_header X-Frame-Options SAMEORIGIN;

add_header X-Content-Type-Options nosniff;

add_header X-XSS-Protection "1; mode=block";

}

}

Deploy the nginx and DNS config for container restart

#!/bin/bash

destination="vacuum-lb-push"

zipPrefix="vacuum-lb-"

zipFileName="$zipPrefix$(date +%Y%m%d).zip"

mkdir "$destination"

# Copy every file named in ship_list.txt into the staging folder

while IFS= read -r file; do

cp "$file" "$destination/$file"

done < ship_list.txt

# Stop here if the staging folder is missing, so the zip and cleanup never run in the wrong directory

cd "$destination" || exit 1

zip -r "$zipFileName" *

mv "$zipFileName" ../.

cd ../

rm -rf "$destination"

scp "$zipFileName" "project:blog2/$zipFileName"

ssh project "cd /media/vacuum-data/vacuum-lb; sudo mv /home/ubuntu/blog2/$zipFileName /media/vacuum-data/vacuum-lb/$zipFileName; sudo bash unpack.sh $zipFileName"

rm "$zipFileName"

- Enable IPv6 on the VPC

- Calculate the IPv6 subnet masks

- Create new subnets that support IPv6

- Create an “egress-only internet gateway” (IPv6 only)

- Add a route to allow internet traffic out through the new egress gateway
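The subnet math in the second step can be sketched in a few lines of bash. This is only an illustration: it assumes the VPC has an Amazon-provided /56 block written with four explicit hextets, and that each subnet gets a /64 carved out by changing the low byte of the fourth hextet. The function name `subnet64` and the `2001:db8:` documentation prefix are made up for the example.

```shell
#!/bin/bash
# subnet64 VPC_CIDR INDEX
# Prints the INDEX-th /64 inside a /56 (hypothetical helper, not an AWS tool).
# Assumes the /56 is written as a:b:c:d::/56 with all four hextets present.
subnet64() {
    local cidr="$1" index="$2"
    local prefix="${cidr%%::/56}"        # e.g. 2001:db8:1234:1a00
    if [ "$prefix" = "$cidr" ] || [ "$index" -lt 0 ] || [ "$index" -gt 255 ]; then
        echo "usage: subnet64 <a:b:c:d::/56> <0-255>" >&2
        return 1
    fi
    local head="${prefix%:*}"            # first three hextets
    local fourth="${prefix##*:}"         # fourth hextet, e.g. 1a00
    # Keep the high byte of the fourth hextet and put the index in the low byte
    local value=$(( (16#$fourth & 0xff00) | index ))
    printf '%s:%x::/64\n' "$head" "$value"
}

subnet64 "2001:db8:1234:1a00::/56" 10
```

A /56 leaves eight bits for subnets, which is why the index is limited to 0 through 255.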

What I quickly discovered after removing my public IPv4 addresses in a VPC/subnet with no NAT gateways (I skipped the NAT gateway to save money) was that a lot of AWS services do not yet support IPv6. My biggest issue was the Elastic Container Registry.

My next project over break was to try to containerize all of my old websites that were hosted on my project box. Getting Apache, PHP, and the dependency chain for those websites working ended up taking several days. Much to my surprise, the tool that ended up being very useful was Amazon Q. It proved to be more useful than ChatGPT when it came to getting Apache, PHP, and my dependency chain to cooperate.

Once I got my old websites containerized, I went after some nice-to-haves. I set up an automatic DNS/tag updater so that my spawned instances follow a naming pattern that makes them easy to manage. This will also allow me to eventually have totally dynamic hosting for my ECS tasks, clusters, and all of my Docker needs.

#!/bin/bash

# Build the image, then clean up old containers and images

version=$(date +"%Y%m%d%H%M%S")

docker build --tag blog.myname.dev:$version .

success=$(docker images | grep -w $version)

if [ -z "$success" ]; then

echo "build failed"

exit 1

fi

imageid=$(docker image ls | grep -w "blog.myname.dev" | awk '{print $3}')

#echo "new imageid: $imageid"

lastimage=$(head -1 <<< "$imageid")

old_containers=$(docker ps -a | grep -w "blog.myname.dev" | grep -w Exited | grep -E "months|weeks|days|hours" | awk '{print $1}')

while IFS= read -r instance

do

docker container rm "$instance"

done <<< "$old_containers"

echo "cleaning up old images"

while IFS= read -r image; do

if [ "$image" != "$lastimage" ]; then

echo "removing image: $image"

docker rmi $image

fi

done <<< "$imageid"

echo "last imageid: $lastimage"

created=$(docker images | grep -w $lastimage | awk '{print $4}')

echo "created: $created"

#!/bin/bash

# Run the newest image and check that the container came up

img=$( docker images | grep blog.myname.dev | head -1 | awk '{print $3}')

echo "running image $img"

docker run -p 80:80 --volume /home/colin/projectbox/var/www/blog/blogdata:/var/www/blog/blogdata --volume /home/colin/projectbox/var/www/blog/logs:/var/log -e AWS_REGION=US-EAST-1 -td "$img"

echo "waiting for container to start"

sleep 5

contid=$(docker ps -a | grep "$img" | awk '{print $1}')

echo "container id is $contid"

status=$(docker ps -a | grep "$contid" | awk '{print $7}')

echo "container status is $status"

if [ "$status" != "Up" ]; then

echo "container failed to start"

docker logs "$contid"

echo "removing container"

docker rm "$contid"

fi

#!/bin/bash

ZONE_ID="myzoneid"

PATTERN="internal.cmh.sh."

# Get all records and filter for internal.cmh.sh entries

dnslist=$(aws route53 list-resource-record-sets --hosted-zone-id "$ZONE_ID" --query "ResourceRecordSets[?ends_with(Name, '$PATTERN')].[ResourceRecords[].Value | [0], Name]" --profile vacuum --output text | sed 's/\.$//')

# update_dns_loop.sh reads $dnslist, so it has to be exported to the child shell

export dnslist

instancelist=$(aws ec2 describe-instances --filters "Name=tag:Name,Values=*.internal.cmh.sh" "Name=instance-state-name,Values=running" --query 'Reservations[].Instances[].[LaunchTime,PrivateIpAddress,Tags[?Key==`Name`].Value | [0]]' --output text --profile vacuum --region us-east-1 | sort)

defaultinstances=$(aws ec2 describe-instances --filters "Name=tag:Name,Values=Vacuum-Server" "Name=instance-state-name,Values=running" --query 'Reservations[].Instances[].[LaunchTime,PrivateIpAddress,Tags[?Key==`Name`].Value | [0]]' --output text --profile vacuum --region us-east-1 | sort)

instanceCount=$(wc -l <<< "$instancelist")

echo "dns list: $dnslist"

echo "instance list: $instancelist"

bash update_dns_loop.sh "$instancelist" $instanceCount

bash update_dns_loop.sh "$defaultinstances" $instanceCount

#!/bin/bash

# update_dns_loop.sh

if [ $# -ne 2 ]; then

echo "Usage:"

echo "data format: "

exit 1

fi

# Quote $1 so each instance stays on its own line for read

echo "$1" | while read -r launchTime privateIp name; do

echo "Checking $name ($privateIp)"

if grep -q "$name" <<< "$dnslist"; then

echo " DNS record exists"

if grep -q "$privateIp" <<< "$dnslist"; then

echo " IP address matches"

else

echo " IP address does not match"

sh update_internal_dns.sh $name $privateIp

fi

else

echo " DNS record does not exist"

if [ "$name" == "Vacuum-Server" ]; then

#will not work if more than one instance was spun up.

name="vacuumhost$2.internal.cmh.sh"

sh update-ec2-name-from-priv-ip.sh $privateIp $name

fi

sh update_internal_dns.sh $name $privateIp

fi

done

#!/bin/bash

# update_internal_dns.sh

if [ $# -ne 2 ]; then

echo "Usage: $0"

exit 1

fi

name=$1

privateIp=$2

aws route53 change-resource-record-sets --hosted-zone-id myzoneid --change-batch '{ "Changes": [ { "Action": "UPSERT", "ResourceRecordSet": { "Name": "'"$name"'", "Type": "A", "TTL": 300, "ResourceRecords": [ { "Value": "'"$privateIp"'" } ] } } ] }' --profile vacuum --output text

#!/bin/bash

# update-ec2-name-from-priv-ip.sh

if [ $# -ne 2 ]; then

echo "Usage: $0"

exit 1

fi

PRIVATE_IP=$1

NEW_NAME=$2

INSTANCE_ID=$(aws ec2 describe-instances --filters "Name=private-ip-address,Values=$PRIVATE_IP" --query "Reservations[].Instances[].InstanceId" --output text --profile vacuum --region us-east-1)

if [ -z "$INSTANCE_ID" ]; then

echo "No instance found with private IP $PRIVATE_IP"

exit 1

fi

aws ec2 create-tags --resources $INSTANCE_ID --tags "Key=Name,Value=$NEW_NAME" --profile vacuum --region us-east-1

if [ $? -eq 0 ]; then

echo "Successfully updated tag for instance $INSTANCE_ID"

else

echo "Failed to update tag"

fi
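The decision the DNS loop makes for each instance (record missing, record present with the right IP, record present with a stale IP) can be pulled out into a small function that runs without AWS access. This is a sketch, not the scripts' actual logic: the function name `dns_action` is made up, it assumes the DNS list has one `ip name` pair per line as the Route 53 query prints it, and it matches the name exactly instead of using a substring `grep`.

```shell
#!/bin/bash
# dns_action NAME IP DNSLIST
# Prints "create", "ok", or "update" (hypothetical helper for illustration).
# DNSLIST is assumed to hold one "ip<whitespace>name" pair per line.
dns_action() {
    local name="$1" ip="$2" dnslist="$3"
    local current
    # Look up the IP currently recorded for this exact name, if any
    current=$(awk -v n="$name" '$2 == n { print $1; exit }' <<< "$dnslist")
    if [ -z "$current" ]; then
        echo "create"
    elif [ "$current" = "$ip" ]; then
        echo "ok"
    else
        echo "update"
    fi
}

# Example data; the addresses and host names are placeholders
records=$'10.0.0.5\tvacuumhost1.internal.cmh.sh\n10.0.0.6\tvacuumhost2.internal.cmh.sh'
dns_action "vacuumhost1.internal.cmh.sh" "10.0.0.9" "$records"
```

Matching the name and IP as a pair avoids the case where an instance's IP happens to appear in the list under a different host name.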

#!/bin/bash

sudo yum update -y

sudo yum upgrade -y

#Install EFS utils

sudo yum install -y amazon-efs-utils

#sudo apt-get -y install git binutils rustc cargo pkg-config libssl-dev gettext

#git clone https://github.com/aws/efs-utils

#cd efs-utils

#./build-deb.sh

#sudo apt-get -y install ./build/amazon-efs-utils*deb

sudo yum -y install docker

sudo systemctl enable docker

sudo systemctl start docker

#sudo yum -y install boto3

if [ ! -d /media/vacuum-data ]; then

sudo mkdir /media/vacuum-data

fi

echo "fs-05863c9e54e7cdfa4:/ /media/vacuum-data efs _netdev,noresvport,tls,iam 0 0" >> /etc/fstab

#sudo systemctl daemon-reload

sudo mount -a

#docker start redis

aws ecr get-login-password --region us-east-1 | docker login --username AWS --password-stdin 631538352062.dkr.ecr.us-east-1.amazonaws.com

sudo docker pull 631538352062.dkr.ecr.us-east-1.amazonaws.com/cmh.sh:vacuumflask

sudo sh /media/vacuum-data/run.sh

#ECS related

if [ -d /etc/ecs ]; then

echo "ECS_CLUSTER=vacuumflask_workers" > /etc/ecs/ecs.config

echo "ECS_BACKEND_HOST=" >> /etc/ecs/ecs.config

#TODO: register with the alb?

fi

I’ve spent a few weekends playing around with AWS ALBs and AWS ECS. I got the ALB working after a while of messing with the security groups, and eventually found where to set the permissions that allow the ALB to log to S3. It turns out the permissions live in the S3 bucket policy: you have to allow an AWS account access to your bucket so it can write the logs.

With ECS, I’ve run into a number of issues trying to get my blog running with sufficient permissions to do the things it needs to do. What’s interesting about the ECS interface is that the best way to use it is with JSON from the command line. This has some inherent issues, though, because it requires you to put a lot of environment-specific information in your JSON files. Ideally, if you’re checking in your source code, you wouldn’t be hard-coding secrets in it. After I got the basic environment working, I moved all of my secrets out of environment variables and into Secrets Manager, where they should have been to begin with. Along the way I have learned a lot more about containers, working with environment variables, and debugging both in containers and in local environments.

The basic steps to get a container running in ECS:

- get the image uploaded to a container repo

- permissions / ports

- ecs task permissions

- ecs execution permissions

- security group access to the subnets and ports in play

- create your task definition

- create your service definition

- Redis for shared server session state

- Added health checks to the apache load balancer

- Added EFS, docker, and the required networking to the mail server.

- Fixed issue with deployment pipeline not refreshing image

- Limited main page to 8 posts and added links at the bottom to everything else so it will still get indexed.
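For the ECS steps earlier in this list, one way to keep account-specific values out of a checked-in JSON file is to render the task definition from arguments at deploy time. The sketch below is an assumption-heavy illustration: the function name `render_task_definition`, the `DB_PASSWORD` secret name, the memory size, and the bridge/EC2 settings are all placeholders, not the values used for this blog.

```shell
#!/bin/bash
# render_task_definition FAMILY IMAGE EXEC_ROLE_ARN TASK_ROLE_ARN SECRET_ARN
# Prints an ECS task definition as JSON (hypothetical helper for illustration).
# The secret is referenced by ARN, so its value never appears in the file.
render_task_definition() {
    local family="$1" image="$2" exec_role="$3" task_role="$4" secret_arn="$5"
    cat <<EOF
{
  "family": "$family",
  "executionRoleArn": "$exec_role",
  "taskRoleArn": "$task_role",
  "networkMode": "bridge",
  "requiresCompatibilities": ["EC2"],
  "containerDefinitions": [
    {
      "name": "$family",
      "image": "$image",
      "memory": 256,
      "essential": true,
      "portMappings": [{ "containerPort": 8080, "hostPort": 8080 }],
      "secrets": [{ "name": "DB_PASSWORD", "valueFrom": "$secret_arn" }]
    }
  ]
}
EOF
}

# Example call with placeholder values; redirect the output to a file, then
# register it with:
#   aws ecs register-task-definition --cli-input-json file://taskdef.json
render_task_definition "vacuumflask" "example.invalid/cmh.sh:vacuumflask" \
    "arn:aws:iam::111122223333:role/example-execution-role" \
    "arn:aws:iam::111122223333:role/example-task-role" \
    "arn:aws:secretsmanager:us-east-1:111122223333:secret:example"
```

The execution role is the one that needs permission to read the secret, since ECS resolves `valueFrom` before the container starts.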

#!/bin/bash

#Check if we have a parameter

if [ $# -eq 1 ]; then

#check if the parameter is a file that exists

if [ -f "$1" ]; then

unzip -o "$1"

rm "$1"

fi

fi

oldimage=$(docker images | grep -w vacuumflask | awk '{print $3}')

newimageid=$(sh build.sh | awk '{print $4}')

runninginstance=$(docker ps | grep -w "$oldimage" | awk '{print $1}')

docker kill "$runninginstance"

sh run.sh

nowrunninginstance=$(docker ps | grep -w "$newimageid" | awk '{print $1}')

docker ps

echo "new running instance id is: $nowrunninginstance"

Welp, the “new” blog is live. It’s running on Python 3.12 with the latest builds of Flask and Waitress. This version of my blog is containerized and actually uses a database. A very basic concept, I know. I considered running the blog on the Elastic Container Service and also as an Elastic Beanstalk app. The problem with both is that I don’t really need the extra capacity, and I already have reserved instances purchased for my existing EC2 instances. I’m not sure how well Flask works with multiple nodes; I may have to play around with that for resiliency’s sake. For now we are using apache2 as a reverse HTTPS proxy with everything hosted on my project box.

Todo items: SEO for posts, RSS for syndication and a site map, and fixing S3 access when running from a Docker container. Everything else should be working. There is also a sorting issue with the blog posts that I need to work out.

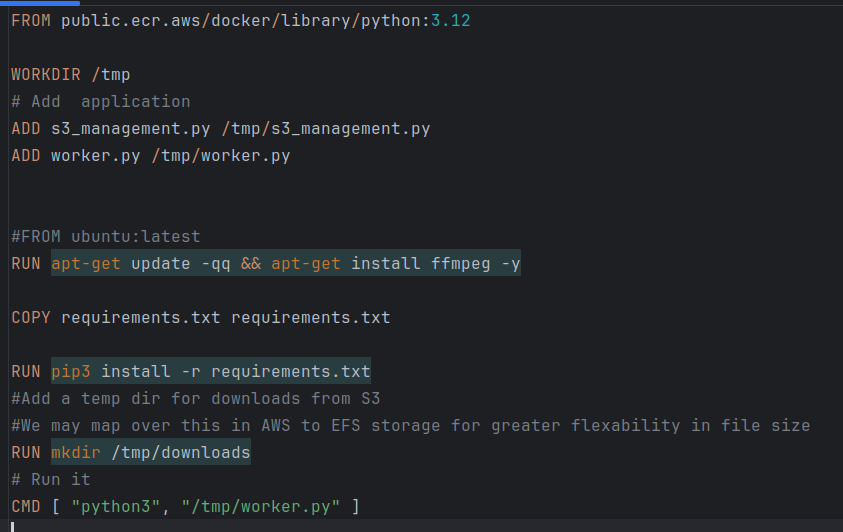

FROM public.ecr.aws/docker/library/python:3.12

WORKDIR /tmp

# Add sample application

ADD app.py /tmp/app.py

ADD objects.py /tmp/objects.py

ADD hash.py /tmp/hash.py

COPY templates /tmp/templates

COPY static /tmp/static

COPY requirements.txt requirements.txt

RUN pip3 install -r requirements.txt

EXPOSE 8080

# Run it

CMD [ "waitress-serve", "app:app" ]

#!/bin/bash

docker build --tag vacuumflask .

imageid=$(docker image ls | grep -w "vacuumflask" | awk '{print $3}')

docker run --env-file "/home/colin/python/blog2/vacuumflask/.env" --volume /home/colin/python/blog2/vacuumflask/data:/tmp/data -p 8080:8080 "$imageid"

#!/bin/bash

destination="beanstalk/"

zipPrefix="vacuumflask-"

zipPostfix=$(date '+%Y%m%d')

zipFileName="$zipPrefix$zipPostfix.zip"

mkdir "$destination"

cp -a templates/. "$destination/templates"

cp -a static/. "$destination/static"

cp app.py "$destination"

cp Dockerfile "$destination"

cp hash.py "$destination"

cp objects.py "$destination"

cp requirements.txt "$destination"

cd "$destination"

zip -r "../$zipFileName" "."

cd ../

rm -r "$destination"

scp "$zipFileName" project:blog2

scp docker-build-run.sh project:blog2

ssh project

Next weekend I’ll need to figure out how to get it working with Elastic Beanstalk and then work on feature parity.