Fully automated K3s hosting

2025-03-17:

I’ve spent the last four days implementing K3s in my AWS Auto Scaling group. The EKS control plane costs about $70 a month, so I wanted a creative solution that still allowed enhanced automation and control. Along the way I automated the process of creating new launch templates for the Auto Scaling group. A PostgreSQL 15.4 RDS serverless database serves as the backing datastore for K3s. New instances join the K3s cluster as soon as they are added, and the Traefik service load balances everything on all nodes. Automated DNS management handles changes in public and private IPs. One script handles new instances via user data, and another monitors from the outside and updates public DNS so that any new instances are folded in.

source /media/vacuum-data/update_internal_dns_auto.sh
# Kubernetes related
curl -fsSL https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash
mkdir -p /tmp/working
chmod 777 /tmp/working
K3S_URL=$(cat /media/vacuum-data/k3s/k3s_url)
K3S_TOKEN=$(cat /media/vacuum-data/k3s/k3s_token)
# Get the secret value and store it in a variable
secret_string=$(aws secretsmanager get-secret-value \
  --secret-id "$SECRET_ARN" \
  --query 'SecretString' \
  --output text)
# Parse the JSON and extract the values using jq
# Note: You'll need to install jq if not already installed: sudo yum install -y jq
K3S_POSTGRES_USER=$(echo "$secret_string" | jq -r '.K3S_POSTGRES_USER')
K3S_POSTGRES_PASSWORD=$(echo "$secret_string" | jq -r '.K3S_POSTGRES_PASSWORD')
POSTGRES_SERVER=$(echo "$secret_string" | jq -r '.POSTGRES_SERVER')
postgres_conn_k3s="postgres://${K3S_POSTGRES_USER}:${K3S_POSTGRES_PASSWORD}@${POSTGRES_SERVER}:5432/kubernetes"
# Don't echo the password: user data output is written to the cloud-init log
echo "postgres_conn_k3s points at ${K3S_POSTGRES_USER}@${POSTGRES_SERVER}:5432/kubernetes"
# Download the RDS CA bundle
curl -fsSLO https://truststore.pki.rds.amazonaws.com/global/global-bundle.pem
# For k3s configuration, move it to a permanent location
sudo mkdir -p /etc/kubernetes/pki/
sudo mv global-bundle.pem /etc/kubernetes/pki/rds-ca.pem
# ECS related
if [ -d /etc/ecs ]; then
  echo "ECS_CLUSTER=vacuumflask_workers" > /etc/ecs/ecs.config
  echo "ECS_BACKEND_HOST=" >> /etc/ecs/ecs.config
  #TODO: set hostname; set name in /etc/hosts
  #TODO: register with ALB.
fi
MAX_ATTEMPTS=60 # 5 minutes maximum wait time (60 attempts x 5 seconds)
ATTEMPT=0
API_HOST="vacuumhost1.internal.cmh.sh"
API_URL="https://${API_HOST}:6443"
# Check if a k3s server is already online (an unauthenticated request gets a 401)
response=$(curl -s -o /dev/null -w "%{http_code}" \
  --connect-timeout 5 \
  --max-time 10 \
  --insecure \
  "$API_URL")
if [ $? -eq 0 ] && [ "$response" -eq 401 ]; then
  # Join the existing cluster through the shared PostgreSQL datastore
  # (--tls-san takes a hostname or IP, not a URL)
  curl -sfL https://get.k3s.io | sh -s - server \
    --token="${K3S_TOKEN}" \
    --datastore-endpoint="${postgres_conn_k3s}" \
    --datastore-cafile=/etc/kubernetes/pki/rds-ca.pem \
    --log /var/log/k3s.log \
    --tls-san="${API_HOST}"
else
  # Install k3s with PostgreSQL as the datastore
  # This is only if there isn't an existing k3s node
  curl -sfL https://get.k3s.io | sh -s - server \
    --write-kubeconfig-mode=644 \
    --datastore-endpoint="${postgres_conn_k3s}" \
    --log /var/log/k3s.log \
    --datastore-cafile=/etc/kubernetes/pki/rds-ca.pem \
    --token="${K3S_TOKEN}"
    # --tls-san=${K3S_URL}
fi
echo "Waiting for k3s API server to start at $API_URL..."
while [ $ATTEMPT -lt $MAX_ATTEMPTS ]; do
  # Perform curl with timeout and silent mode
  response=$(curl -s -o /dev/null -w "%{http_code}" \
    --connect-timeout 5 \
    --max-time 10 \
    --insecure \
    "$API_URL")
  if [ $? -eq 0 ] && [ "$response" -eq 401 ]; then
    echo "K3s API server is ready!"
    break
  else
    ATTEMPT=$((ATTEMPT + 1))
    remaining=$((MAX_ATTEMPTS - ATTEMPT))
    echo "Waiting... (got response code: $response, attempts remaining: $remaining)"
    sleep 5
  fi
done
if [ $ATTEMPT -eq $MAX_ATTEMPTS ]; then
  echo "K3s API server did not start in time. Exiting."
  exit 1
fi
export KUBECONFIG=/etc/rancher/k3s/k3s.yaml
ECR_PASSWORD=$(aws ecr get-login-password)
echo "$ECR_PASSWORD" | sudo docker login --username AWS --password-stdin 123456789.dkr.ecr.us-east-1.amazonaws.com
# Create (or replace) a secret named 'regcred' in the cluster.
# Rendering with --dry-run=client and piping to apply keeps this safe to re-run on every new node.
# ECR login tokens are valid for 12 hours, so regcred needs refreshing after that.
kubectl create secret docker-registry regcred \
  --docker-server=123456789.dkr.ecr.us-east-1.amazonaws.com \
  --docker-username=AWS \
  --docker-password="${ECR_PASSWORD}" \
  --namespace=default \
  --dry-run=client -o yaml | kubectl apply -f -
kubectl create secret tls firstlast-tls \
  --cert=/media/vacuum-data/vacuum-lb/ssl/wild.firstlast.dev.25.pem \
  --key=/media/vacuum-data/vacuum-lb/ssl/wild.firstlast.dev.25.key \
  --namespace=default \
  --dry-run=client -o yaml | kubectl apply -f -
kubectl create secret tls cmh-tls \
  --cert=/media/vacuum-data/vacuum-lb/ssl/wild.cmh.sh.crt \
  --key=/media/vacuum-data/vacuum-lb/ssl/wild.cmh.sh.key \
  --namespace=default \
  --dry-run=client -o yaml | kubectl apply -f -
helm repo add traefik https://traefik.github.io/charts
helm repo update
# upgrade --install succeeds whether or not the release already exists (this runs on every new node).
# Note: k3s also deploys its own bundled Traefik into kube-system unless the server is started with --disable=traefik.
helm upgrade --install traefik traefik/traefik --namespace traefik --create-namespace
kubectl apply -f https://raw.githubusercontent.com/traefik/traefik/v2.10/docs/content/reference/dynamic-configuration/kubernetes-crd-definition-v1.yml
cd /media/vacuum-data/k3s
source /media/vacuum-data/k3s/setup-all.sh prod
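The post mentions a second script that watches the group from the outside and updates public DNS, but that script isn't shown. Below is a minimal sketch of how such a sync could work. It is not the author's script, and it assumes a hypothetical Auto Scaling group name, hosted zone ID and record name. It collects the public IPs of the group's InService instances and UPSERTs them as one multi-value A record in Route 53.

```shell
#!/usr/bin/env bash
# Sketch of an external DNS sync for the Auto Scaling group.
# ASG_NAME, HOSTED_ZONE_ID and RECORD_NAME are hypothetical placeholders.
ASG_NAME="${ASG_NAME:-k3s-nodes}"
HOSTED_ZONE_ID="${HOSTED_ZONE_ID:-ZEXAMPLE12345}"
RECORD_NAME="${RECORD_NAME:-apps.example.com.}"
TTL="${TTL:-60}"

# Print a Route 53 change batch that UPSERTs one A record holding every IP given.
build_change_batch() {
  local name="$1" ttl="$2"
  shift 2
  local records="" ip
  for ip in "$@"; do
    records+="${records:+,}{\"Value\":\"${ip}\"}"
  done
  printf '{"Changes":[{"Action":"UPSERT","ResourceRecordSet":{"Name":"%s","Type":"A","TTL":%s,"ResourceRecords":[%s]}}]}\n' \
    "$name" "$ttl" "$records"
}

# Print the public IPs of the group's InService instances, one per line.
current_public_ips() {
  local ids
  ids=$(aws autoscaling describe-auto-scaling-groups \
    --auto-scaling-group-names "$ASG_NAME" \
    --query 'AutoScalingGroups[0].Instances[?LifecycleState==`InService`].InstanceId' \
    --output text) || return 1
  # Empty output or "None" means no instances (or no such group)
  if [ -z "$ids" ] || [ "$ids" = "None" ]; then
    return 0
  fi
  # $ids is intentionally unquoted so each instance ID becomes its own argument
  aws ec2 describe-instances --instance-ids $ids \
    --query 'Reservations[].Instances[].PublicIpAddress' \
    --output text | tr '\t' '\n' | awk 'NF && $0 != "None"' | sort
}

# UPSERT the record with the current set of IPs (does nothing if there are none).
sync_dns() {
  local ips
  mapfile -t ips < <(current_public_ips)
  if [ "${#ips[@]}" -eq 0 ]; then
    echo "No InService instances with public IPs; leaving DNS unchanged." >&2
    return 0
  fi
  aws route53 change-resource-record-sets \
    --hosted-zone-id "$HOSTED_ZONE_ID" \
    --change-batch "$(build_change_batch "$RECORD_NAME" "$TTL" "${ips[@]}")"
}
```

To use it, source the file from cron or a systemd timer and call `sync_dns`. The caller needs credentials allowing `autoscaling:DescribeAutoScalingGroups`, `ec2:DescribeInstances` and `route53:ChangeResourceRecordSets`. A single UPSERT with every IP keeps the record consistent in one call. The trade-off is that a node that dies between runs stays in DNS until the next sync.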

Adventures in K3s in 2025

2025-03-09:

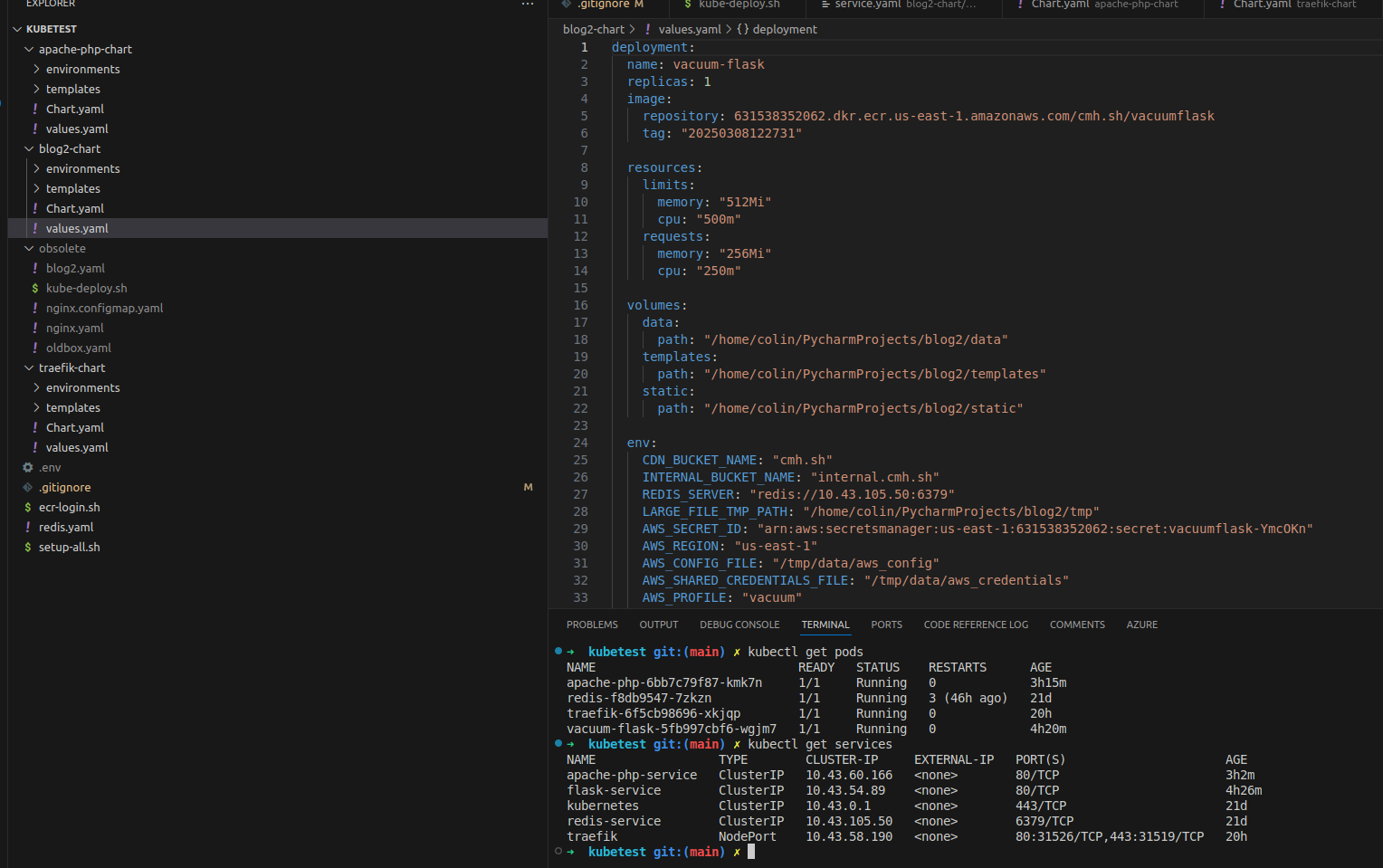

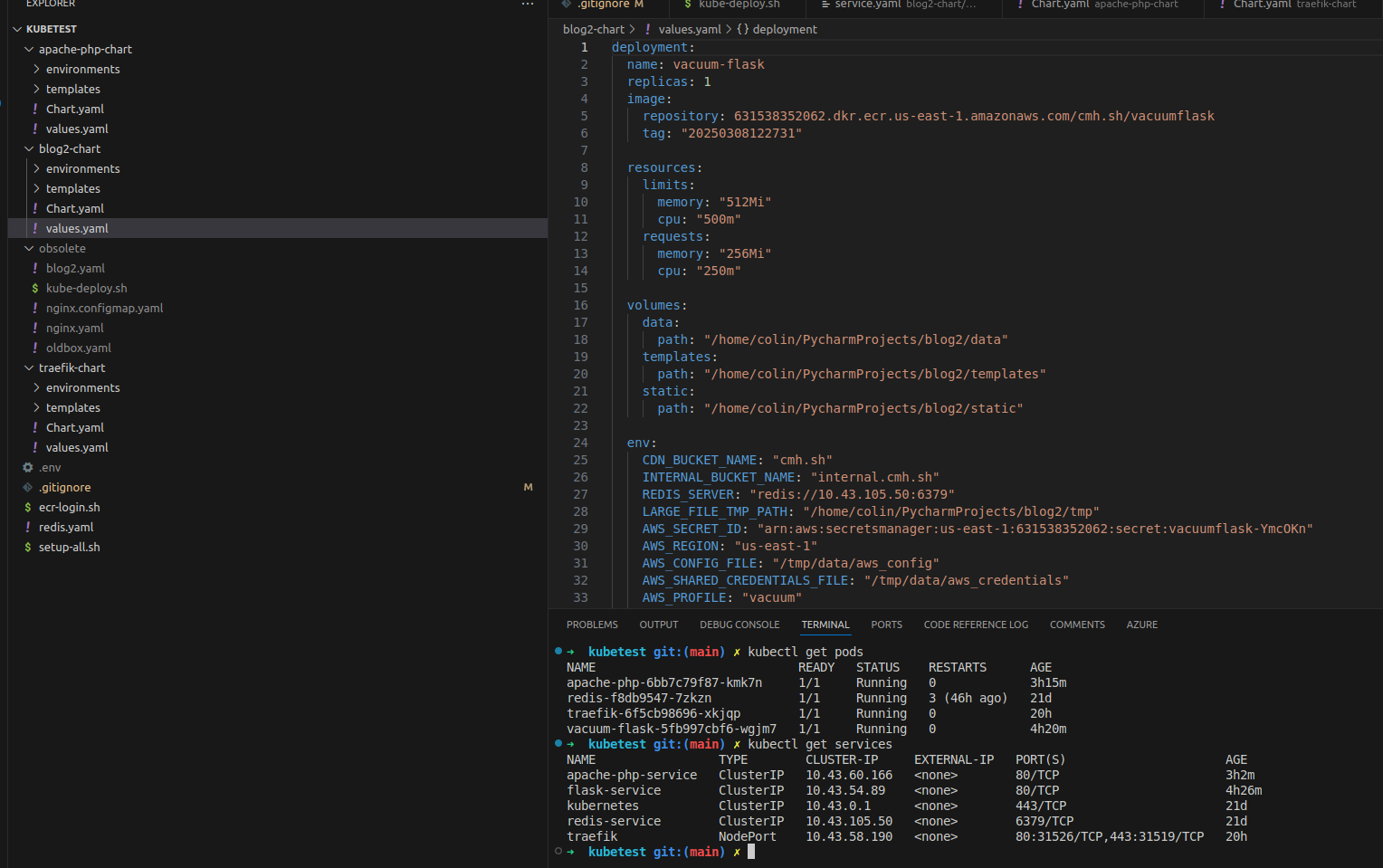

The last two weekends, when I wasn’t visiting my daughter at university, I started converting my containerized applications into a Kubernetes cluster. I wanted to start locally on my laptop to work out the kinks and relearn my way around. Years ago I'd set up a six-node K3s cluster from three Odroid M1s and three VMs on three of my rack-mounted hypervisors. All it ever hosted was a PostgreSQL database that I never really used for much of anything. None of my products at work were anywhere near complex enough to need Kubernetes. Times are changing, and it’s time to pick kubectl back up.

I started by getting K3s set up on my laptop and then asked Amazon Q to help me write some configuration files for my existing containerized services. I immediately found that I wanted to parameterize the files it generated, so of course I wrote a little bash script to swap my variables into the Kubernetes template YAML files. This weekend I got all the containers running. I quickly discovered that I didn’t need a containerized nginx load balancer, because a service-aware load balancer like Traefik was a better fit. When I looked into how to connect all my containers, I realized that Helm had already solved the problem I had been solving with bash. With my newfound understanding of how Helm fits into the Kubernetes ecosystem, I set to work today converting my templates into Helm charts.

My DIY Helm

#!/bin/bash
# Render a Kubernetes template with values from .env, then apply it.
if [ -z "$1" ]; then
  echo "No config supplied"
  exit 1
fi
if [ ! -f "$1" ]; then
  echo "File $1 does not exist"
  exit 1
fi
# Export every KEY=value pair in .env so envsubst can see them
set -a
. ./.env
set +a
envsubst < "$1" | kubectl apply -f -
kubectl get pods
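One catch with the DIY script above: when called with no arguments, `envsubst` replaces every `$NAME` in the template. Any literal `$` in the YAML, such as a shell command in a container's `args`, gets blanked out. The variant below is a minimal sketch that assumes the same `.env` of simple `KEY=value` lines. It builds envsubst's optional SHELL-FORMAT argument from the keys in `.env`, so only those variables are substituted. The function names `env_keys_format` and `render_template` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: render a template, substituting only variables defined in the env file.
# Assumes simple KEY=value lines; blank lines, # comments and "export " are tolerated.

# Print a SHELL-FORMAT string such as '${APP_NAME} ${REPLICAS}' for envsubst.
env_keys_format() {
  local env_file="$1" line key out=""
  while IFS= read -r line || [ -n "$line" ]; do
    line="${line#export }"                      # accept 'export KEY=value'
    case "$line" in ''|'#'*) continue ;; esac   # skip blanks and comments
    key="${line%%=*}"
    [[ "$key" =~ ^[A-Za-z_][A-Za-z0-9_]*$ ]] || continue
    out+="${out:+ }\${${key}}"
  done < "$env_file"
  printf '%s\n' "$out"
}

# Render TEMPLATE to stdout using values from ENV_FILE (default .env).
render_template() {
  local template="$1" env_file="${2:-.env}"
  (
    set -a                 # export everything the env file defines
    . "$env_file"
    set +a
    envsubst "$(env_keys_format "$env_file")" < "$template"
  )
}
```

In the original script, `render_template "$1" | kubectl apply -f -` would take the place of the bare `envsubst` call.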

Handy kubectl and Helm commands

- kubectl get pods

- kubectl logs podid

- kubectl describe pod podid

- kubectl get services

- helm template apache-php ./apache-php-chart -f environments/values-dev.yaml

- helm uninstall apache-php

- kubectl get endpoints flask-service

- kubectl get ingressroutes -A

- helm install blog2-dev ./blog2-chart -f blog2-chart/environments/values-dev.yaml
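The last command in the list follows a per-environment pattern: release `blog2-dev`, chart `./blog2-chart`, values in `blog2-chart/environments/values-dev.yaml`. Below is a small hypothetical wrapper (`helm_deploy`) that derives all three from an app name and an environment. It assumes every chart uses that same layout, which the `apache-php` example suggests may not hold everywhere. It uses `helm upgrade --install` so re-running doesn't fail when the release already exists. With `DRY_RUN=1` it only prints the command.

```shell
#!/usr/bin/env bash
# Sketch: deploy <app> to <env>, assuming the hypothetical layout
#   ./<app>-chart/environments/values-<env>.yaml
helm_deploy() {
  local app="$1" env="${2:-dev}"
  local release="${app}-${env}"
  local chart="./${app}-chart"
  local values="${app}-chart/environments/values-${env}.yaml"
  local cmd=(helm upgrade --install "$release" "$chart" -f "$values")

  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "${cmd[*]}"   # show what would run
    return 0
  fi
  if [ ! -f "$values" ]; then
    echo "Values file not found: $values" >&2
    return 1
  fi
  "${cmd[@]}"
}
```

For example, `helm_deploy blog2 dev` runs the same release, chart and values file as the `helm install blog2-dev ...` line above. The difference is that it upgrades in place when the release is already installed.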

Terraform vs. Kubernetes on an under-the-weather Saturday

2/11/2023:

The home lab:

Saturday plans

We had a health screening this morning, and I was already a bit under the weather. I have a project coming up for work where we will be using Kubernetes, and we already have many projects that use Terraform. A few weeks ago I attended a good Terraform training put on by AWS. After updating my local home lab, I'm going to try to stay motivated to dig into these two tech stacks.

Going to try and work through this blog post on Proxmox and Kubernetes: Running a Kubernetes Cluster Using Proxmox and Rancher.

Update - Saturday night: This project went OK at first, and I got all 3 Linux machines created. I followed the instructions and got the cluster "provisioning" in Rancher, but it never made it out of that state. I later discovered that the "main" kube controller had run out of disk space. The directions called for 20GB, but for some reason the LVM had only set aside 10GB for the main drive. I managed to get the physical drive extended, but try as I might, the LVM was being a pain in the ass. I ended up nuking the main node, and I accidentally nuked one of the workers while rage quitting. If I feel better in the morning, I'm going back to woodworking. My first day with Kubernetes didn't go well, although Rancher looks neat.
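For the disk problem above: after enlarging a VM's disk in Proxmox, an LVM root volume usually needs three steps. First grow the partition, then resize the physical volume, then extend the logical volume and its filesystem. Below is a minimal sketch, assuming a default Ubuntu-style layout (disk `/dev/sda`, LVM on partition 3, LV `/dev/ubuntu-vg/ubuntu-lv`). These names are assumptions, so check yours with `lsblk`, `sudo vgs` and `sudo lvs` first. If `vgs` already shows free space in the volume group, the third step alone is enough; that would fit an installer that only set aside part of the disk for root. `growpart` comes from the cloud-guest-utils package. `DRY_RUN=1` only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: grow an LVM root volume after enlarging the VM's disk.
# DISK, PART_NUM and LV_PATH are assumptions for a default Ubuntu install
# on a SCSI disk; confirm yours before running.
DISK="${DISK:-/dev/sda}"
PART_NUM="${PART_NUM:-3}"
LV_PATH="${LV_PATH:-/dev/ubuntu-vg/ubuntu-lv}"

# Run a command with sudo, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    sudo "$@"
  fi
}

grow_root_lv() {
  # 1. Grow the partition to the end of the disk.
  #    growpart exits non-zero when there is nothing to grow, so keep going.
  run growpart "$DISK" "$PART_NUM" || echo "growpart made no change; continuing" >&2
  # 2. Let LVM see the larger partition (NVMe partitions look like /dev/nvme0n1p3 instead).
  run pvresize "${DISK}${PART_NUM}" || return 1
  # 3. Give all free space in the volume group to the LV; -r also resizes the filesystem.
  run lvextend -r -l +100%FREE "$LV_PATH"
}
```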
