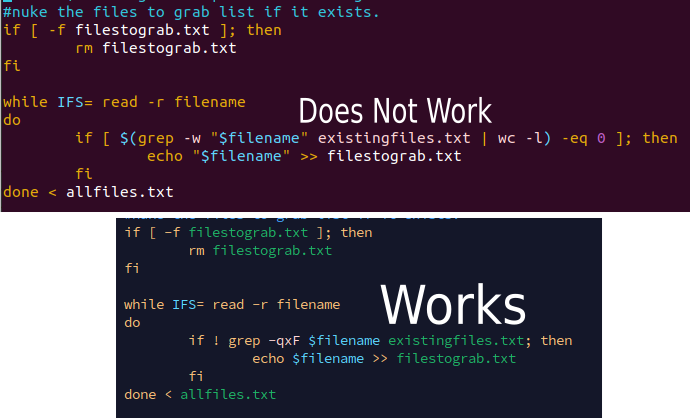

if [] if [[]] if [ $() ];

At the end of the day, one of my guys figured it out.

#!/bin/bash

secretobj=$(aws secretsmanager get-secret-value --secret-id "product/service/reporting" --region us-east-1)

#SecretString is itself JSON stored as a string, so let jq decode it with fromjson instead of unescaping it with sed

rptpassword=$(echo "$secretobj" | jq -r '.SecretString | fromjson | .password')

logfilename="service-report-log_$(date +'%Y-%m-%d').log"

echo "starting report run $(date '+%Y%m%d')" > "$logfilename"

ls service-reports | sort > existingfiles.txt

allfiles=$(sshpass -p "$rptpassword" sftp -oBatchMode=no -b - service-reports << !

cd ppreports/outgoing

ls

exit

!

)

#cleanup output from sftp server

echo "$allfiles" | sed -e '1,/^sftp> ls$/d' -e '/^sftp> cd ppreports\/outgoing$/d' -e '/^sftp> exit/d' | sort > allfiles.txt

printf 'all files on server:\n%s\n' "$allfiles" >> "$logfilename"

#nuke the files to grab list if it exists.

if [ -f filestograb.txt ]; then

rm filestograb.txt

fi

while IFS= read -r filename

do

if ! grep -qxF -- "$filename" existingfiles.txt; then

echo "$filename" >> filestograb.txt

fi

done < allfiles.txt

comm -23 allfiles.txt existingfiles.txt > filestograb2.txt

if [ -f filestograb.txt ]; then

printf 'files to grab:\n' >> "$logfilename"

cat filestograb.txt >> "$logfilename"

echo "starting scp copy"

while IFS= read -r filename

do

sshpass -p "$rptpassword" scp "service-reports:ppreports/outgoing/$filename" "service-reports/$filename"

done < filestograb.txt

else

echo "No files missing?"

fi

echo "ending report run $(date '+%Y%m%d')" >> "$logfilename"
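The while loop and the comm line in the script above build the same list of missing files. Here is a minimal sketch of that comparison with made-up file names; the temp directory and the names are assumptions for illustration, not part of the real job. It also shows a grep one-liner that should give the same answer without a loop.

```shell
#!/bin/bash
# Sample file names are made up; in the real script the lists come from ls and sftp.
workdir=$(mktemp -d)
printf '%s\n' a.csv b.csv c.csv d.csv > "$workdir/allfiles.txt"
printf '%s\n' a.csv c.csv > "$workdir/existingfiles.txt"

# comm -23 keeps lines that appear only in the first file (both files must be sorted).
missing_comm=$(comm -23 "$workdir/allfiles.txt" "$workdir/existingfiles.txt")

# grep: -v inverts the match, -x matches whole lines, -F treats patterns as
# fixed strings, -f reads the patterns from a file.
missing_grep=$(grep -vxFf "$workdir/existingfiles.txt" "$workdir/allfiles.txt")

echo "$missing_comm"
rm -rf "$workdir"
```

Both variables end up holding b.csv and d.csv, one per line. comm needs sorted input, which the script already guarantees by piping both lists through sort.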

So this week has been a lot. I can’t talk about most of it, but a lot of good bash script came out of it. Today also marks the end of the road for one of my favorite people on this planet, my Grandpa CL. May he rest in peace. This is for the best, as he wasn’t doing well, and if I somehow make it to 99, I hope I can go out in my own home just like him. So back to this week’s work:

New to me this week:

- Reading load balancer logs

- sed on macOS is different from GNU sed. Props to Homebrew for bringing gsed to the Mac.

- Finding and cat’ing all files recursively in folders

- Using awk to count things

- doing math on the command line

- merge files line by line with paste

- wc -l #count the lines in a file

- du -sh path/to/dir #total size of a directory
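A few of the items in that list (paste, wc -l, du -sh) are easy to try on throwaway data. This is only a sketch: the file names and contents below are invented for the example.

```shell
#!/bin/bash
# Throwaway sample files; names and contents are made up for illustration.
tmp=$(mktemp -d)
printf '%s\n' alpha beta gamma > "$tmp/names.txt"
printf '%s\n' 1 2 3 > "$tmp/sizes.txt"

# paste merges the files line by line; -d, joins the columns with a comma.
merged=$(paste -d, "$tmp/names.txt" "$tmp/sizes.txt")
echo "$merged"

# wc -l counts lines; reading from stdin keeps the file name out of the output,
# and tr strips the padding that some wc implementations add.
linecount=$(wc -l < "$tmp/names.txt" | tr -d ' ')
echo "lines: $linecount"

# du -sh prints one human-readable total for the directory (value varies by system).
du -sh "$tmp"

rm -rf "$tmp"
```

The merged output is alpha,1 then beta,2 then gamma,3, and the line count is 3.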

#grab the file list from all the s3 buckets:

#!/bin/bash

echo "Searching"

while IFS= read -r bucket

do

if [ ! -f "bucket_contents/$bucket.txt" ]; then

echo "Grabbing bucket: $bucket"

aws s3 ls "s3://$bucket" --recursive --profile events > "bucket_contents/$bucket.txt"

fi

done < gs_buckets.txt

#Loop through buckets and grep for matching file names.

#!/bin/bash

echo "Searching"

while IFS= read -r bucket

do

if [ -f "bucket_contents/$bucket.txt" ]; then

echo "Searching bucket: $bucket"

while IFS= read -r filename

do

gsed -E 's/^[0-9-]+\s+[0-9:]+\s+[0-9]+\s+//' "bucket_contents/$bucket.txt" | grep -e "$filename" >> filelog.txt

done < filelist.txt

fi

done < gs_buckets.txt

#Mac Sed != Linux Sed
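One way to sidestep the difference, at least for the regex above: \s is a GNU extension, while the POSIX class [[:space:]] is not tied to GNU sed. The sketch below uses plain sed -E on a made-up line in the shape of `aws s3 ls --recursive` output (date, time, size, key). The sample line is an assumption for illustration, and I have only reasoned this through, not tested it on every sed out there.

```shell
#!/bin/bash
# Hypothetical sample line shaped like `aws s3 ls --recursive` output.
line='2024-06-28 22:47:50       1234 reports/2024/summary.csv'

# Strip the date, time, and size columns, leaving only the object key.
# [[:space:]] is the POSIX spelling of what \s means in GNU sed.
key=$(printf '%s\n' "$line" | sed -E 's/^[0-9-]+[[:space:]]+[0-9:]+[[:space:]]+[0-9]+[[:space:]]+//')

echo "$key"
```

This prints reports/2024/summary.csv.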

#Cat all of the files in all of the folders

find . -type f -exec cat {} +

#Read all files from an aws load balancer, return code 200

find . -type f -exec cat {} + | awk '{print $10 "," $12 "," $13}' | grep -w 200 | sort | uniq -c | sort | awk '{print $1 "," $2}' > ../test.csv

#Take the port number off an ip address

gsed -E 's/:[0-9]+,/,/'
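Putting the port stripping and the counting together on a tiny sample makes the pipeline easier to follow. The three input lines below are invented (an address with a port, then a status code), and I use plain sed -E and sort -n here as assumptions of my own rather than the exact commands from the log run.

```shell
#!/bin/bash
# Made-up "client:port,status" lines standing in for fields pulled from the logs.
counts=$(printf '%s\n' '10.0.0.1:5000,200' '10.0.0.2:5001,200' '10.0.0.1:5002,200' \
  | sed -E 's/:[0-9]+,/,/' \
  | sort \
  | uniq -c \
  | sort -n \
  | awk '{print $1 "," $2}')

echo "$counts"
```

The sed step drops the port so the same client collapses into one line, uniq -c prefixes each distinct line with its count, and the final awk turns that into CSV: 1,10.0.0.2,200 followed by 2,10.0.0.1,200.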

# Use AWK to count the number of lines between two numbers in a file.

#!/bin/bash

if [ $# -eq 3 ]; then

topNo=$1

botNo=$2

total=$3

date

echo "Remaining:"

left=$(awk -F'/' '{print $2}' movedir.txt | \

awk -v top="$topNo" -v bottom="$botNo" '$1 > bottom && $1 <= top {count++} END {print count+0}')

echo "There are $left directories left to process"

#Do math and output the result

pctdone=$(bc -l <<< "scale=1; 100*($total-$left)/$total")

echo "$pctdone% complete"

else

echo "Usage: $0 (top no) (bottom no) (total)"

fi
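To see what the range count does without a real movedir.txt, here is a small sketch on invented data. The sample lines, the variable names, and doing the percentage in awk instead of bc are all my assumptions for the example, handy if bc is not installed.

```shell
#!/bin/bash
# Made-up stand-in for movedir.txt: the second /-separated field is the number.
sample=$(printf '%s\n' 'move/3/a' 'move/7/b' 'move/12/c' 'move/20/d')
top=12
bottom=3
total=8

# Count lines whose number is above bottom and at most top.
# count+0 makes awk print 0 instead of an empty string when nothing matches.
left=$(printf '%s\n' "$sample" | awk -F'/' -v top="$top" -v bottom="$bottom" \
  '$2 > bottom && $2 <= top {count++} END {print count+0}')

# Percentage complete, formatted to one decimal place.
pct_done=$(awk -v l="$left" -v t="$total" 'BEGIN {printf "%.1f", (1 - l/t) * 100}')

echo "$left left, $pct_done% complete"
```

With these numbers only 7 and 12 fall in the range, so it prints "2 left, 75.0% complete".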

I spent a lot of my spare time this week trying to finish solving a problem I ran into at the tail end of last weekend: why my EC2 VM, with an IAM profile that should grant it access to an S3 bucket, can’t use the AWS PHP SDK to connect to that bucket. I kept getting variations of this exception:

| [Fri Jun 28 22:47:50.774779 2024] [php:error] [pid 38165] [client 1.2.3.4 :34135] PHP Fatal error: Uncaught Error: Unknown named parameter $instance in /home/notarealuser/vendor/aws/aws-sdk-php/src/Credentials/CredentialProvider.php:74\nStack trace:\n#0 /home/notarealuser/vendor/aws/aws-sdk-php/src/Credentials/CredentialProvider.php(74): call_user_func_array()\n#1 /home/notarealuser/vendor/aws/aws-sdk-php/src/ClientResolver.php(263): Aws\\Credentials\\CredentialProvider::defaultProvider()\n#2 /home/notarealuser/vendor/aws/aws-sdk-php/src/AwsClient.php(158): Aws\\ClientResolver->resolve()\n#3 /home/notarealuser/vendor/aws/aws-sdk-php/src/Sdk.php(270): Aws\\AwsClient->__construct()\n#4 /home/notarealuser/vendor/aws/aws-sdk-php/src/Sdk.php(245): Aws\\Sdk->createClient()\n#5 /var/www/blog/s3upload.php(35): Aws\\Sdk->__call()\n#6 {main}\n thrown in /home/notarealuser/vendor/aws/aws-sdk-php/src/Credentials/CredentialProvider.php on line 74 |

I didn’t want to, but I ended up adding the AWS CLI to my test box and confirmed that I could indeed access my bucket using the IAM profile for the EC2 instance running this code, without any hard-coded credentials on the box. I ended up calling the AWS CLI from my code directly. This isn’t ideal, but I’ve wasted enough time this week fighting with this bug. In other news, ChatGPT is pretty fantastic at writing regular expressions and translating English into sed commands for processing text data. Because I had to use the AWS CLI, I was getting the contents of my S3 bucket back as text that wasn’t in an ideal format for code to consume. Here is the prompt I used and the response.

I validated that the sed was correct on the website https://sed.js.org/

ChatGPT also provided a useful breakdown of the sed command that it wrote.

One more tool for my toolbox for working with files in *nix environments.