Hardware Upgrade and Proxmox 9.1

2025-09-21:

With the retirement of another data center stack, I had the opportunity to refresh a piece of iron. I chose to replace an R530 that had eight 3.5-inch drives and the oldest iDRAC in my lab. Thankfully, this time the server arrived from FedEx with only slightly bent rails. I upgraded the server from Windows Server 2022 to 2025, played around with Windows for a few days, and then decided to try out Proxmox 9.1. Proxmox has come a long way since Proxmox 7. While trying to move virtual machines around after getting my NFS servers attached, I saw the strangest behavior. It turned out that I had plugged a Cat 5e cable into a 10-gigabit Ethernet port. Everything looked fine, but the connections were unstable.

The next step in my virtualization adventure was to migrate and upgrade a licensed Windows 10 VM. Once my network situation was resolved, it was just a matter of time before I was able to offload the VM and its drive and get them on the new host. After some Windows updates, I set about getting it upgraded to Windows 11. The process involved:

- Adding a virtual TPM

- Adding a virtual EFI Disk

- Switching the BIOS to OVMF

- Using a Windows 11 USB drive to clean up some bad entries in the MBR

- Repairing the MBR

- Converting the disk to GPT using MBR2GPT

- Running the Windows 11 PC Health Check and verifying that everything was in order

- Upgrading to Windows 11

Father's Day weekend with Okta

2025-06-15:

This Father's Day weekend, I decided to play around with Okta as an identity provider for my various creations. I spent half a day messing with AI and the Okta SDK and got virtually nowhere. After a break, I went back and started fresh the old-fashioned way, quickly made progress, found the system logs in Okta, and was promptly in business. My blog now supports logging in to the admin interface with Okta. Today, I picked up where I had left off, retooled a bit of DevOps automation for my hosting environment, and started working on authenticating users against Okta from Python. Once again, Google was the most useful resource, and I found the documentation page I needed to authenticate a user against Okta.

from getpass import getpass

import requests

import json

import os

import logging

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger(__name__)

username = "colin@cmh.sh"

password = getpass()

session = requests.Session()

session.headers.update({

'Accept': "application/json",

'Content-Type': "application/json",

'Cache-Control': "no-cache",

})

# Single quotes inside the f-string keep this valid on Python versions before 3.12

url_authn = f"{os.environ.get('OKTA_ORG_URL')}/api/v1/authn"

logger.info(f"auth URL: {url_authn}")

payload_authn = json.dumps({

"username": username,

"password": password,

"options": {"warnBeforePasswordExpired": True,

"multiOptionalFactorEnroll": True},

})

response = session.post(url_authn, data=payload_authn)

logger.info(f"auth response: {response.text}")

resp_obj = json.loads(response.text)

if resp_obj["status"] != "SUCCESS" and resp_obj["status"] != "MFA_REQUIRED":

    logger.error(f"auth response: {resp_obj['status']}")

    # Raising a bare string is a TypeError in Python 3, so raise an exception instead

    raise RuntimeError("Login failed")

if resp_obj["status"] == "MFA_REQUIRED":

    factors = resp_obj["_embedded"]["factors"]

    logger.info(f"factors: {factors}")

    factorId = getpass(prompt="factor id: ")

    mfa_otp_url = f"{os.environ.get('OKTA_ORG_URL')}/api/v1/authn/factors/{factorId}/verify"

    #https://developer.okta.com/docs/reference/api/authn/#verify-totp-factor

    otp = getpass(prompt="OTP:")

    mfa_payload = json.dumps({

        "stateToken": resp_obj["stateToken"],

        "passCode": otp

    })

    logger.info(f"MFA URL: {mfa_otp_url}")

    mfa_resp = session.post(url=mfa_otp_url, data=mfa_payload)

    logger.info(f"mfa response: {mfa_resp.text}")

    resp_obj = json.loads(mfa_resp.text)

    if resp_obj["status"] != "SUCCESS":

        logger.error(f"mfa response: {resp_obj['status']}")

        raise RuntimeError("MFA failed")

logger.info(f"Successfully logged into okta. sessionToken: {resp_obj['sessionToken']} userID: {resp_obj['_embedded']['user']['id']}")
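The script above logs the factor list and asks for the factor id by hand. One possible refinement, sketched here on the assumption that each entry under `_embedded.factors` carries an `id` and a `factorType` (with `token:software:totp` for authenticator apps), is to pick the TOTP factor automatically. `pick_totp_factor_id` is a hypothetical helper name, and the sample response is made up for illustration.

```python
def pick_totp_factor_id(authn_response: dict) -> str:
    """Return the id of the first TOTP factor in an MFA_REQUIRED response.

    Assumes the factors live under _embedded.factors, as in the script above.
    """
    factors = authn_response.get("_embedded", {}).get("factors", [])
    for factor in factors:
        if factor.get("factorType") == "token:software:totp":
            return factor["id"]
    raise LookupError("no TOTP factor enrolled for this user")


# Made-up sample, shaped like the response the script logs.
sample = {
    "status": "MFA_REQUIRED",
    "stateToken": "example-state-token",
    "_embedded": {
        "factors": [
            {"id": "sms-factor-id", "factorType": "sms"},
            {"id": "totp-factor-id", "factorType": "token:software:totp"},
        ]
    },
}

print(pick_totp_factor_id(sample))  # prints totp-factor-id
```

The returned id could then stand in for the value typed at the `factor id:` prompt.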

The year is flying by

2025-05-18:

It’s been a minute. I started messing around with Terraform and serverless tech to host my bs. What I’ve discovered is that for the cost of a NAT gateway I could just have a project box that’s always on. Even with the cost of storage, an always-on box with RIs is still cheaper than a NAT gateway plus serverless tech. Honestly, though, I haven’t spent much time on tech projects since my last post. I’ve mostly been playing R.E.P.O. with a team from Discord. So right now my online empire is still running in Docker containers on an EC2 instance. This is quite cost-efficient, but it still requires regular maintenance. Going serverless scales better but is not cheaper at the outset. For my work I need to explore more use of Terraform as it relates to EKS, but EKS costs a minimum of $70 a month for the control plane, and I’ve heard estimates on Reddit that its minimum footprint is around $400 a month, which is way over my $50/mo budget for my AWS-hosted bs. This weekend I did some housecleaning on my subnets and fixed some long-standing issues. I then set about creating a health check Lambda that can check the health and accessibility of my websites’ resources. I still need to integrate it into my existing Lambda Terraform project, but that will be for another weekend.
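A health check Lambda along these lines could look roughly like the sketch below. It is not the actual function from this project: the event shape (`{"urls": [...]}`), the helper names, and the "2xx or 3xx counts as healthy" rule are all assumptions made for illustration. It uses only the standard library so it needs no packaging.

```python
import urllib.error
import urllib.request


def fetch_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code for a GET request to url."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses arrive as exceptions but still carry a status.
        return err.code


def check_sites(urls, fetch=fetch_status):
    """Map each URL to a small result dict; network errors count as unhealthy."""
    results = {}
    for url in urls:
        try:
            status = fetch(url)
            results[url] = {"healthy": 200 <= status < 400, "status": status}
        except OSError as err:
            # URLError and socket timeouts are both OSError subclasses.
            results[url] = {"healthy": False, "error": str(err)}
    return results


def lambda_handler(event, context):
    """Lambda entry point; assumes an event like {"urls": ["https://..."]}."""
    results = check_sites(event.get("urls", []))
    return {
        "healthy": all(r["healthy"] for r in results.values()),
        "results": results,
    }
```

Passing `fetch` as a parameter keeps the checking logic testable without touching the network.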

Fully automated K3S hosting

2025-03-17:

I’ve spent the last four days implementing k3s in my Auto Scaling group in AWS. With the EKS control plane costing $70 a month, I wanted a creative solution that still allowed enhanced automation and control. Along the way I automated the process of creating new auto scale templates. A Postgres 15.4 RDS serverless back end serves as the datastore backing the k3s cluster. When new instances are added, they immediately join the k3s cluster, and everything is load balanced by the Traefik service on all nodes. This is coupled with some automated DNS management to handle changes in public and private IPs. One script handles new instances via user data, and another monitors from the outside and updates public DNS to ensure that any new instances are folded in.

source /media/vacuum-data/update_internal_dns_auto.sh

#Kubernetes related

sudo curl -sSL https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 | bash

mkdir /tmp/working

chmod 777 /tmp/working

K3S_URL=$(cat /media/vacuum-data/k3s/k3s_url)

K3S_TOKEN=$(cat /media/vacuum-data/k3s/k3s_token)

# Get the secret value and store it in a variable

secret_string=$(aws secretsmanager get-secret-value \
    --secret-id "$SECRET_ARN" \
    --query 'SecretString' \
    --output text)

# Parse the JSON and extract the values using jq

# Note: You'll need to install jq if not already installed: sudo yum install -y jq

K3S_POSTGRES_USER=$(echo "$secret_string" | jq -r '.K3S_POSTGRES_USER')

K3S_POSTGRES_PASSWORD=$(echo "$secret_string" | jq -r '.K3S_POSTGRES_PASSWORD')

POSTGRESS_SERVER=$(echo "$secret_string" | jq -r '.POSTGRES_SERVER')

con="postgres://$K3S_POSTGRES_USER:$K3S_POSTGRES_PASSWORD@$POSTGRESS_SERVER:5432/kubernetes"

postgres_conn_k3s=${con}

# Avoid echoing the full connection string, since it contains the database password

echo "postgres_conn_k3s is set (host: $POSTGRESS_SERVER)"

# Download the RDS CA bundle

curl -O https://truststore.pki.rds.amazonaws.com/global/global-bundle.pem

# For k3s configuration, you'll want to move it to a permanent location

sudo mkdir -p /etc/kubernetes/pki/

sudo mv global-bundle.pem /etc/kubernetes/pki/rds-ca.pem

#ECS related

if [ -d /etc/ecs ]; then

echo "ECS_CLUSTER=vacuumflask_workers" > /etc/ecs/ecs.config

echo "ECS_BACKEND_HOST=" >> /etc/ecs/ecs.config

#TODO: set hostname; set name in /etc/hosts

#TODO: register with ALB.

fi

MAX_ATTEMPTS=60 # 5 minutes maximum wait time

ATTEMPT=0

API_URL="https://vacuumhost1.internal.cmh.sh:6443"

# Check if a k3s node is already online

response=$(curl -s -o /dev/null -w "%{http_code}" \
    --connect-timeout 5 \
    --max-time 10 \
    --insecure \
    "$API_URL")

if [ $? -eq 0 ] && [ "$response" -eq 401 ]; then

    # An existing node answered, so join the cluster.
    # --tls-san expects a hostname or IP, so strip the scheme and port from API_URL.
    api_host="${API_URL#https://}"
    api_host="${api_host%%:*}"

    curl -sfL https://get.k3s.io | sh -s - server \
        --token="${K3S_TOKEN}" \
        --datastore-endpoint="${postgres_conn_k3s}" \
        --log /var/log/k3s.log \
        --tls-san="${api_host}"

else

    # Install k3s with PostgreSQL as the datastore

    #this is only if there isn't an existing k3s node

    curl -sfL https://get.k3s.io | sh -s - server \
        --write-kubeconfig-mode=644 \
        --datastore-endpoint="${postgres_conn_k3s}" \
        --log /var/log/k3s.log \
        --datastore-cafile=/etc/kubernetes/pki/rds-ca.pem \
        --token="${K3S_TOKEN}"

    # --tls-san=${K3S_URL}

fi

echo "Waiting for k3s API server to start at $API_URL..."

while [ $ATTEMPT -lt $MAX_ATTEMPTS ]; do

# Perform curl with timeout and silent mode

response=$(curl -s -o /dev/null -w "%{http_code}" \
    --connect-timeout 5 \
    --max-time 10 \
    --insecure \
    "$API_URL")

if [ $? -eq 0 ] && [ "$response" -eq 401 ]; then

echo "K3s API server is ready!"

break;

else

ATTEMPT=$((ATTEMPT + 1))

remaining=$((MAX_ATTEMPTS - ATTEMPT))

echo "Waiting... (got response code: $response, attempts remaining: $remaining)"

sleep 5

fi

done

if [ $ATTEMPT -eq $MAX_ATTEMPTS ]; then

echo "K3s API server did not start in time. Exiting."

exit 1

fi

export KUBECONFIG=/etc/rancher/k3s/k3s.yaml

pwd=$(aws ecr get-login-password)

echo "$pwd" | sudo docker login --username AWS --password-stdin 123456789.dkr.ecr.us-east-1.amazonaws.com

# --ignore-not-found avoids an error on the first run, when the secret does not exist yet

kubectl delete secret regcred --namespace=default --ignore-not-found

# Create a secret named 'regcred' in your cluster

kubectl create secret docker-registry regcred \
    --docker-server=123456789.dkr.ecr.us-east-1.amazonaws.com \
    --docker-username=AWS \
    --docker-password="${pwd}" \
    --namespace=default

kubectl create secret tls firstlast-tls \
    --cert=/media/vacuum-data/vacuum-lb/ssl/wild.firstlast.dev.25.pem \
    --key=/media/vacuum-data/vacuum-lb/ssl/wild.firstlast.dev.25.key \
    --namespace=default

kubectl create secret tls cmh-tls \
    --cert=/media/vacuum-data/vacuum-lb/ssl/wild.cmh.sh.crt \
    --key=/media/vacuum-data/vacuum-lb/ssl/wild.cmh.sh.key \
    --namespace=default

helm repo add traefik https://traefik.github.io/charts

helm repo update

helm install traefik traefik/traefik --namespace traefik --create-namespace

kubectl apply -f https://raw.githubusercontent.com/traefik/traefik/v2.10/docs/content/reference/dynamic-configuration/kubernetes-crd-definition-v1.yml

cd /media/vacuum-data/k3s

source /media/vacuum-data/k3s/setup-all.sh prod

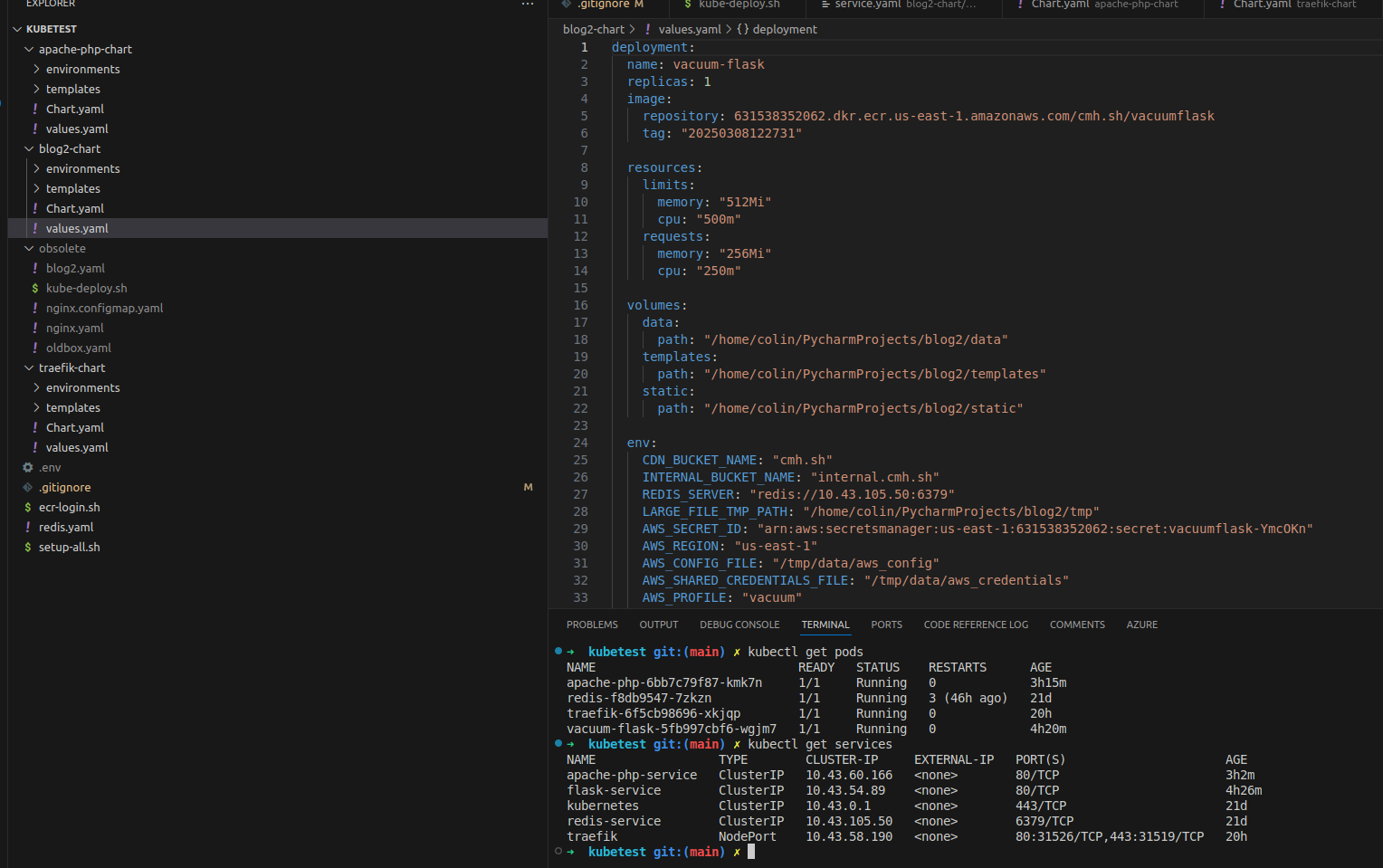

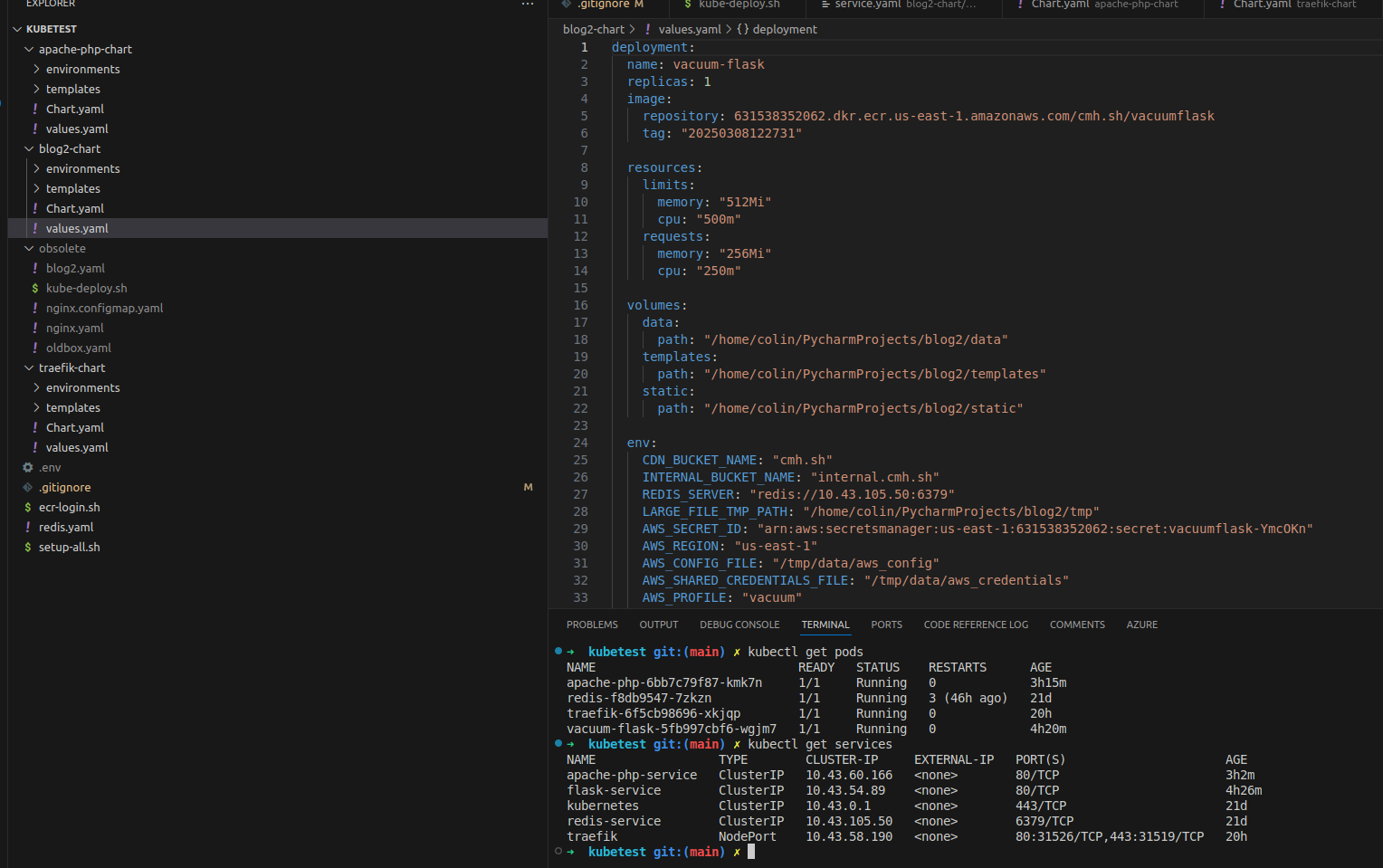
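The wait loop in the script above boils down to "probe until the API answers 401, or give up". Here is a minimal Python sketch of that pattern. The function name and the injectable `probe` and `sleep` parameters are hypothetical choices that keep the loop testable; the defaults mirror the 60 attempts and 5 second pause from the script.

```python
import time


def wait_for_api(probe, max_attempts=60, delay=5.0, sleep=time.sleep):
    """Poll probe() until it returns 401 or the attempts run out.

    probe is any callable returning an HTTP status code (or None when the
    connection fails). The script above treats a 401 from the k3s API as
    "server is up", so this sketch does the same.
    """
    for attempt in range(1, max_attempts + 1):
        if probe() == 401:
            return attempt  # number of probes it took
        sleep(delay)
    return None  # never became ready


# Simulated probe results: connection failure, then 503, then 401.
responses = iter([None, 503, 401])
print(wait_for_api(lambda: next(responses), sleep=lambda _: None))  # prints 3
```

In real use, `probe` would wrap an HTTPS request to the API URL with a short timeout.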

Adventures in K3S in 2025

2025-03-09:

The last two weekends, when I wasn’t visiting my daughter at university, I started work on converting my containerized applications to run in a Kubernetes cluster. I wanted to start local on my laptop to work out the kinks and relearn my way around. Years ago I’d set up a K3s cluster using three Odroid M1s plus three VMs on three of my rack-mounted hypervisors, for a six-node K3s cluster. All it ever hosted was a PostgreSQL database, which I never really used for much of anything. None of my products at work were anywhere near implementing anything as complicated as Kubernetes. Times are changing, and it’s time to pick kubectl back up. I started by getting K3s set up on my laptop and then asked Amazon Q to help get me started writing some configuration files for my existing containerized services. What I immediately found was that I wanted to parameterize the files it generated, so of course I wrote a little bash script to swap out my variables in the Kubernetes template YAML file. This weekend I got all the containers running and quickly discovered that I didn’t need a containerized nginx load balancer, because it was better to use a service-aware load balancer like Traefik. When I looked into how to connect all my containers, I quickly realized that the problem I had been solving with bash had already been solved by Helm. With my newfound understanding of how Helm fits into the Kubernetes ecosystem, I set to work today converting my templates into Helm charts.

my diy helm

#!/bin/bash

if [ -z "$1" ]

then

echo "No config supplied"

exit 1

fi

if [ ! -f "$1" ]

then

echo "File $1 does not exist"

exit 1

fi

export $(cat .env | xargs)

# Quote the path so config file names with spaces still work

envsubst < "$1" > "$1.tmp"

kubectl apply -f "$1.tmp"

rm "$1.tmp"

kubectl get pods

Handy kubectl commands

- kubectl get pods

- kubectl logs podid

- kubectl describe pod podid

- kubectl get services

- helm template apache-php ./apache-php-chart -f environments/values-dev.yaml

- helm uninstall apache-php

- kubectl get endpoints flask-service

- kubectl get ingressroutes -A

- helm install blog2-dev ./blog2-chart -f blog2-chart/environments/values-dev.yaml

Slick loading of environmental variables into bash

2025-02-18:

Picked up a cool trick yesterday:

#!/bin/bash

# Load all the environment variables from the .env file

export $(cat .env | xargs)

#Take the task definition file and replace the environment variables

envsubst < tomcat-task-def-template.json > task-definition.json.tmp

if [ ! -f task-definition.json.tmp ]; then

echo "task-definition.json.tmp is missing"

exit 1

fi

full_path=$(pwd)

# Register the task definition

# full_path already starts with "/", so file:// plus the path gives a clean file:///... URL

aws ecs register-task-definition --cli-input-json "file://${full_path}/task-definition.json.tmp"

read -p "Remove the temp file? [y/n] " -n 1 -r removetempfile

if [[ $removetempfile =~ ^[Yy]$ ]]

then

rm task-definition.json.tmp

fi
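One caveat with `export $(cat .env | xargs)` is that values containing spaces tend to get split into separate words. For comparison, here is a rough Python sketch of the same load-then-substitute idea. It is not a drop-in replacement for `envsubst`: `parse_env` and `render` are hypothetical helpers, the parsing covers only plain `KEY=VALUE` lines, and `string.Template.substitute` raises `KeyError` on a missing variable where `envsubst` would substitute an empty string.

```python
from string import Template


def parse_env(text: str) -> dict:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop optional surrounding quotes around the value.
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def render(template_text: str, values: dict) -> str:
    """Substitute $VAR and ${VAR}; raises KeyError if a variable is missing."""
    return Template(template_text).substitute(values)


# Made-up .env content and template, for illustration only.
env_text = """
# comment lines are ignored
APP_NAME=tomcat
GREETING="hello world"
"""
template = '{"family": "${APP_NAME}", "greeting": "$GREETING"}'
print(render(template, parse_env(env_text)))
# prints {"family": "tomcat", "greeting": "hello world"}
```

Failing loudly on a missing variable is a deliberate difference here, since a silently empty value in a task definition can be hard to spot.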

Adventures in NGINX

2025-01-25:

Last weekend I started building a container that could take the place of the pair of Application Load Balancers that AWS was providing. The ALBs work great, but at $30 a month for the pair they are beyond overkill and cost more than all of my servers. Something else I learned is that Amazon Q, when integrated with VS Code, is very decent. It can use the open files as a source of input and often has valuable troubleshooting advice. I’d also like to give a shout-out to Twitch user rajeshmasterofthecows, who joined my stream this morning, asked good questions, and pitched in some useful advice.

user nginx;

worker_processes auto;

error_log /var/log/nginx/error.log notice;

pid /var/run/nginx.pid;

events {

worker_connections 1024;

}

http {

upstream vacuumflask {

server staticendpoint:8080 max_fails=3 fail_timeout=30s;

server dynamicendpoint:8080 max_fails=3 fail_timeout=30s;

least_conn; # Least connections

}

upstream blog1

{

server dynamicendpoint:80 max_fails=3 fail_timeout=30s;

}

server {

listen 80;

server_name blog4.cmh.sh;

return 301 https://$server_name$request_uri;

}

server {

listen 443 ssl;

server_name blog.cmh.sh blog4.cmh.sh;

location / {

proxy_pass http://vacuumflask;

proxy_set_header Host $host; # Add this line to forward the original host

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

}

#proxy_set_header Host $host;

ssl_certificate /tmp/ssl/wild.cmh.sh.crt;

ssl_certificate_key /tmp/ssl/wild.cmh.sh.key;

ssl_session_cache shared:SSL:1m;

ssl_session_timeout 120m;

ssl_protocols TLSv1.2 TLSv1.3;

ssl_ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384;

ssl_prefer_server_ciphers off;

# Basic security headers

add_header Strict-Transport-Security "max-age=31536000; includeSubDomains" always;

add_header X-Frame-Options SAMEORIGIN;

add_header X-Content-Type-Options nosniff;

add_header X-XSS-Protection "1; mode=block";

}

server {

listen 443 ssl;

server_name cmh.sh ots.cmh.sh;

location / {

proxy_pass http://blog1;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header Host $host; # Add this line to forward the original host

}

#proxy_set_header Host $host;

ssl_certificate /tmp/ssl/wild.cmh.sh.crt;

ssl_certificate_key /tmp/ssl/wild.cmh.sh.key;

ssl_session_cache shared:SSL:1m;

ssl_session_timeout 120m;

ssl_protocols TLSv1.2 TLSv1.3;

ssl_ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384;

ssl_prefer_server_ciphers off;

# Basic security headers

add_header Strict-Transport-Security "max-age=31536000; includeSubDomains" always;

add_header X-Frame-Options SAMEORIGIN;

add_header X-Content-Type-Options nosniff;

add_header X-XSS-Protection "1; mode=block";

}

server {

listen 443 ssl;

server_name oldblog;

location / {

proxy_pass http://blog1;

proxy_set_header X-Real-IP $remote_addr;

proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;

proxy_set_header Host $host; # Add this line to forward the original host

}

#proxy_set_header Host $host;

ssl_certificate /tmp/ssl/wild.oldblog.dev.25.pem;

ssl_certificate_key /tmp/ssl/wild.oldblog.key;

ssl_session_cache shared:SSL:1m;

ssl_session_timeout 120m;

ssl_protocols TLSv1.2 TLSv1.3;

ssl_ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384;

# Commands for watching the logs:

# tail -f /var/log/nginx/error.log

# tail -f /var/log/nginx/access.log

ssl_prefer_server_ciphers off;

# Basic security headers

add_header Strict-Transport-Security "max-age=31536000; includeSubDomains" always;

add_header X-Frame-Options SAMEORIGIN;

add_header X-Content-Type-Options nosniff;

add_header X-XSS-Protection "1; mode=block";

}

}

deploy nginx and dns config for container restart

#!/bin/bash

destination="vacuum-lb-push"

zipPrefix="vacuum-lb-"

zipFileName="$zipPrefix$(date +%Y%m%d).zip"

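mkdir -p "$destination"

while IFS= read -r file; do

    # Recreate any subdirectory first so nested paths in ship_list.txt copy cleanly

    mkdir -p "$destination/$(dirname "$file")"

    cp "$file" "$destination/$file"

done < ship_list.txt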

cd $destination

zip -r $zipFileName *

mv $zipFileName ../.

cd ../

rm -rf $destination

scp $zipFileName project:blog2/$zipFileName

ssh project "cd /media/vacuum-data/vacuum-lb; sudo mv /home/ubuntu/blog2/$zipFileName /media/vacuum-data/vacuum-lb/$zipFileName; sudo bash unpack.sh $zipFileName"

rm $zipFileName
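The packaging half of the deploy script (read `ship_list.txt`, zip the listed files under a date-stamped name) can also be sketched in Python with the standard `zipfile` module. This is an illustration only: `build_archive` is a hypothetical helper, it assumes the paths in the ship list are relative to the directory holding the list, and it leaves out the `scp` and `ssh` steps.

```python
import zipfile
from datetime import date
from pathlib import Path


def build_archive(ship_list: Path, out_dir: Path, prefix: str = "vacuum-lb-") -> Path:
    """Zip every file named in ship_list into <prefix>YYYYMMDD.zip."""
    base = ship_list.parent
    archive = out_dir / f"{prefix}{date.today():%Y%m%d}.zip"
    with zipfile.ZipFile(archive, "w", zipfile.ZIP_DEFLATED) as zf:
        for line in ship_list.read_text().splitlines():
            name = line.strip()
            if not name:
                continue  # tolerate blank lines in the list
            # arcname keeps the relative path, so nested files work too.
            zf.write(base / name, arcname=name)
    return archive
```

Calling `build_archive(Path("ship_list.txt"), Path("."))` from the project directory would produce the same kind of zip file the bash version builds, without the temporary copy directory.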